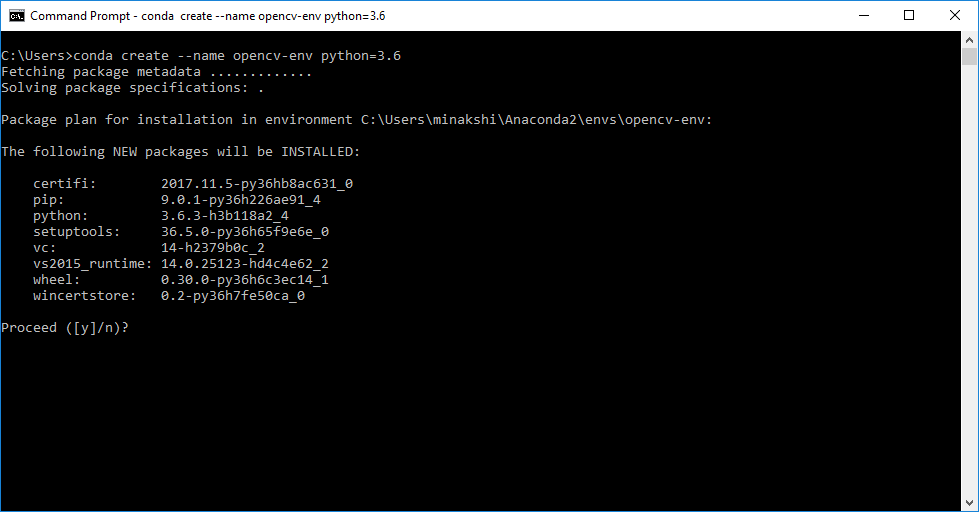

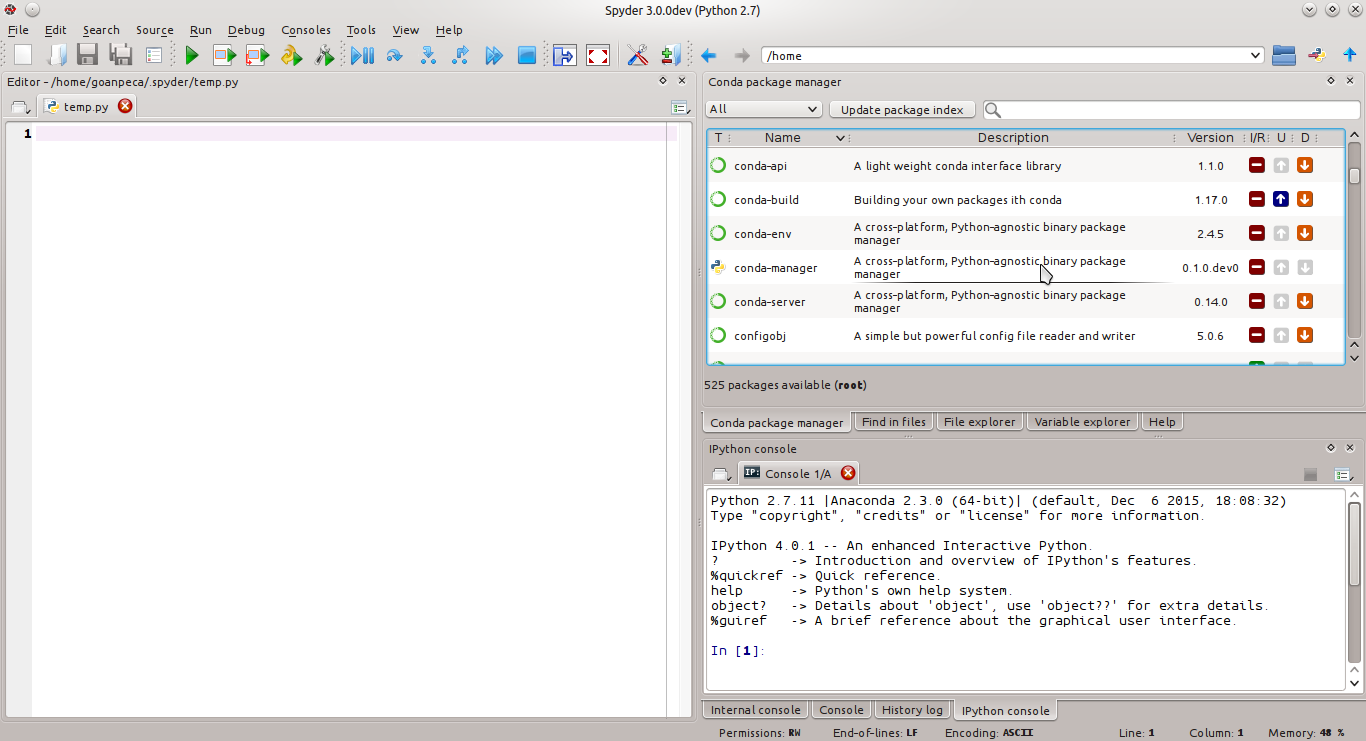

Since I mostly do data science with PySpark, I suggest Anaconda by Continuum Analytics, as it comes with most of the things you will need later on. Warning! There is a PySpark issue with Python 3.6 (and up), which was fixed in Spark 2.1.1. If for some reason you need to use an older version of Spark, make sure your Python is older than 3.6. You can do that by creating a separate conda environment with an older Python version. Finally, since Spark runs on the JVM, you will also need Java on your machine.
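The version warning and the Java requirement can be turned into a quick pre-flight check. This is only a minimal sketch, assuming plain numeric version strings such as `2.0.2` (suffixes like `-SNAPSHOT` are not handled). The helper names `parse_version`, `python_ok_for_spark` and `java_on_path` are made up for this post and are not part of PySpark.

```python
import shutil
import sys


def parse_version(text):
    """Turn a plain numeric version string like '2.1.1' into a tuple of ints."""
    return tuple(int(part) for part in text.split(".")[:3])


def python_ok_for_spark(spark_version, python_version=None):
    """Return False for the combination the warning is about:
    Python 3.6 or newer together with a Spark release older than 2.1.1."""
    if python_version is None:
        # Default to the interpreter running this script.
        python_version = sys.version_info[:2]
    spark = parse_version(spark_version)
    return not (tuple(python_version[:2]) >= (3, 6) and spark < (2, 1, 1))


def java_on_path():
    """Check whether a `java` executable can be found on PATH.
    Assumption: Java located only through JAVA_HOME is not detected here."""
    return shutil.which("java") is not None


if __name__ == "__main__":
    print("Python:", ".".join(str(n) for n in sys.version_info[:3]))
    print("OK for Spark 2.0.2:", python_ok_for_spark("2.0.2"))
    print("OK for Spark 2.1.1:", python_ok_for_spark("2.1.1"))
    print("Java on PATH:", java_on_path())
```

If the check fails for the Spark version you need, a dedicated conda environment with an older interpreter is one way out, for example something along the lines of `conda create -n py35 python=3.5` (the environment name `py35` is just an illustration).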


To code anything in Python, you need a Python interpreter first.


For both our training and our analysis and development work at SigDelta, we often use Apache Spark's Python API, aka PySpark. Despite the fact that Python has been present in Apache Spark almost from the beginning of the project (version 0.7.0 to be exact), the installation was not exactly the pip-install type of setup the Python community is used to. This has changed recently as, finally, PySpark has been added to the Python Package Index (PyPI) and, thus, it has become much easier. In this post I will walk you through the typical local setup of PySpark on your own machine. This will allow you to start and develop PySpark applications and analyses, follow along with tutorials and experiment in general, without the need (and cost) of running a separate cluster. We will also give some tips to the often neglected Windows audience on how to run PySpark on your favourite system.
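Once PySpark has been installed from PyPI (`pip install pyspark`), a tiny job is enough to confirm that the local setup works without any cluster. The following is a minimal sketch, assuming PySpark 2.0 or newer (where `SparkSession` is available). The function name `run_smoke_test` and the application name are made up for illustration.

```python
import shutil


def run_smoke_test():
    """Run a trivial Spark job in local mode.

    Returns the row count (5) on success, or None when PySpark is not
    installed or no `java` executable is found on PATH.
    """
    try:
        from pyspark.sql import SparkSession
    except ImportError:
        return None  # PySpark is not installed in this environment

    if shutil.which("java") is None:
        # Conservative assumption: without `java` on PATH we do not even try.
        return None

    # local[1] runs Spark inside this process with a single worker thread.
    spark = (
        SparkSession.builder
        .master("local[1]")
        .appName("local-smoke-test")
        .getOrCreate()
    )
    try:
        # Build a DataFrame with the numbers 0..4 and count its rows.
        return spark.range(5).count()
    finally:
        spark.stop()


if __name__ == "__main__":
    result = run_smoke_test()
    if result is None:
        print("PySpark or Java is missing, nothing was run.")
    else:
        print("Spark counted", result, "rows locally.")
```

Running everything with `local[1]` keeps the whole job on your own machine, which is exactly the kind of setup this post is about.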
